One key aspect of the AI Standards Hub’s strategy going forward – in addition to the Hub’s four functional pillars – is for activities to be structured around specific thematic areas. The first such area, and the Hub’s focus for the next six months, is ‘trustworthy AI’. This blog post introduces this area, sets out the background for selecting it, and provides a preview of the Hub’s programme of activities over the coming months.

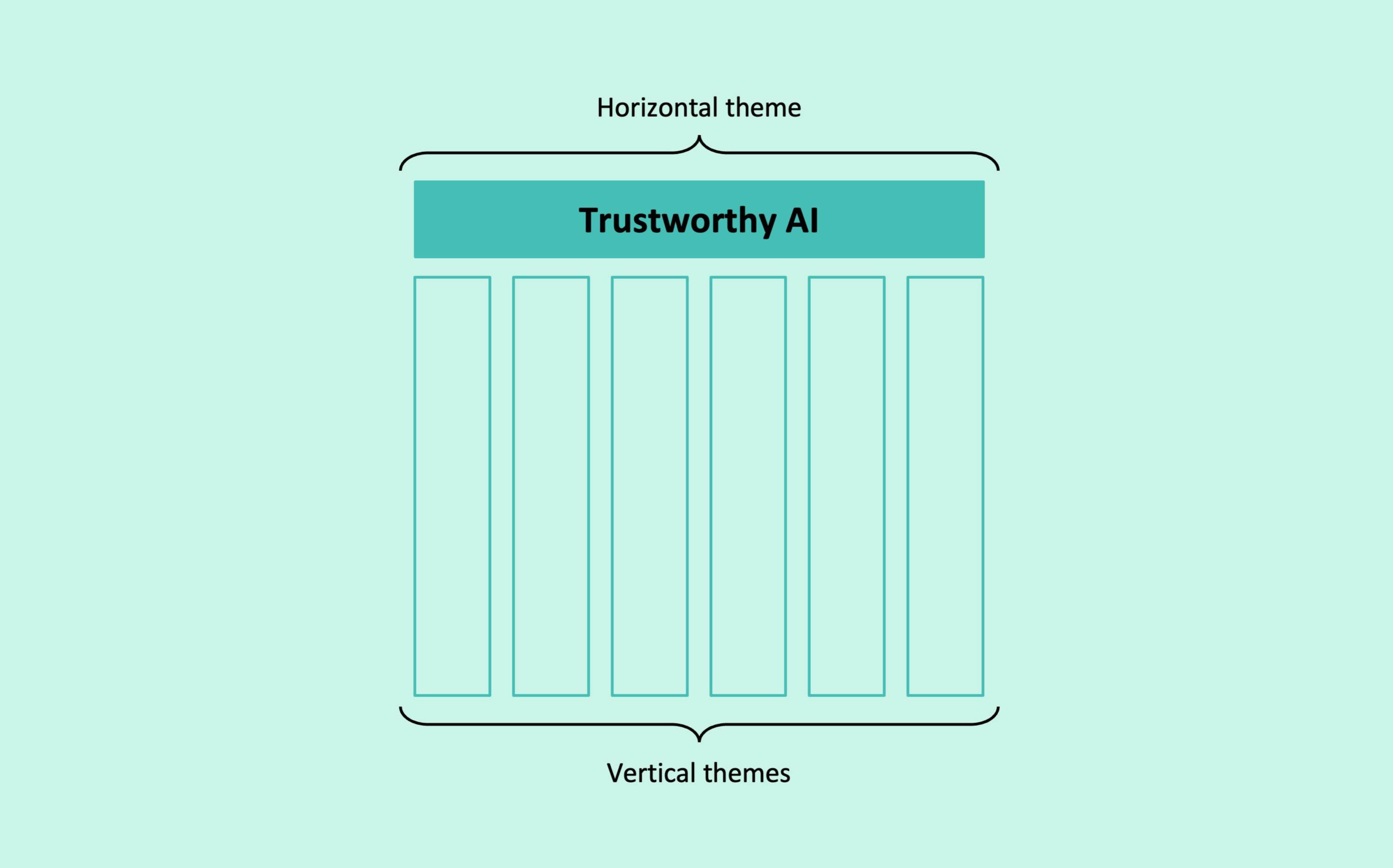

The stakeholder roundtables and surveys that we conducted while developing the Hub’s strategy demonstrated a clear need for the Hub to pursue both work that takes a cross-cutting horizontal perspective (considering questions that apply across different uses of AI) and work that takes a vertical perspective (focused on a specific sector or use case). Both approaches are reflected in the AI standardisation landscape: as the ‘domain’ filter in our AI Standards Database illustrates, Standards Development Organisations (SDOs) are undertaking horizontal as well as vertical standards development projects in relation to AI.

In response to this need, the Hub’s activities will, over time, centre around a combination of horizontal and vertical thematic areas. Initially, the Hub will focus on trustworthy AI as a horizontal thematic area. Our choice of theme was motivated by three factors: the advanced stage of several horizontal AI standardisation projects, stakeholder interest in horizontal trustworthiness topics, and the significance of cross-sector trustworthy AI questions in evolving policy and regulatory thinking in the UK and abroad.

The Hub will cultivate informed discussions on the extent to which horizontal approaches can tackle trustworthy AI challenges, illuminating the benefits as well as the limits of such approaches.

Why trustworthy AI?

Trustworthiness is a prerequisite for the safe and ethical adoption of AI technologies. Note that trustworthiness differs from trust: it concerns whether the use of a technology actually deserves people’s trust in the first place. People can put their trust in a technology that is not trustworthy. The fundamental importance of trustworthiness, and the goal of trustworthy AI, lies in enabling justified trust in technologies and preventing misplaced trust that can lead to harm.

Evaluating and communicating the trustworthiness of AI systems can be a challenge across all sectors and use cases. While there has been considerable work on developing both high-level principles and concrete practical solutions, further work is required at both levels, and work to bridge the two levels is also greatly needed. One challenge is that many sets of principles differ from or even contradict each other, making it difficult to understand how they should be interpreted and implemented. Satisfying abstract principles is also made more difficult by intrinsic mathematical limitations: for example, except in narrow circumstances, it is impossible to satisfy two popular definitions of fairness simultaneously. Which definition is prioritised should depend on the application context, but few clear guidelines for making this choice have emerged.
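The post does not say which two fairness definitions it has in mind. One well-known instance of this kind of impossibility (Chouldechova, 2017) involves predictive parity (equal positive predictive value, PPV, across groups) and error-rate balance (equal false positive and false negative rates, FPR and FNR). For a group with prevalence p, the confusion-matrix definitions imply FPR = p / (1 - p) × (1 - PPV) / PPV × (1 - FNR), so when two groups have different prevalences, equal PPV and equal FNR force unequal FPRs, except in edge cases such as a classifier with no false positives. The minimal Python sketch below checks this arithmetic on two hypothetical groups; the group sizes, prevalences and function names are illustrative assumptions rather than anything taken from a standard.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Confusion-matrix counts for one (hypothetical) group."""
    tp: float
    fp: float
    fn: float
    tn: float

    @property
    def ppv(self) -> float:
        # Positive predictive value: share of predicted positives that are real.
        return self.tp / (self.tp + self.fp)

    @property
    def fnr(self) -> float:
        # False negative rate: share of real positives that are missed.
        return self.fn / (self.tp + self.fn)

    @property
    def fpr(self) -> float:
        # False positive rate: share of real negatives flagged as positive.
        return self.fp / (self.fp + self.tn)


def implied_fpr(prevalence: float, ppv: float, fnr: float) -> float:
    """FPR forced by a group's prevalence, PPV and FNR."""
    return (prevalence / (1 - prevalence)) * ((1 - ppv) / ppv) * (1 - fnr)


def build_group(n: float, prevalence: float, ppv: float, fnr: float) -> Confusion:
    """Construct counts for a group that meets the given PPV and FNR exactly."""
    positives = n * prevalence
    negatives = n - positives
    tp = positives * (1 - fnr)
    fn = positives - tp
    fp = tp * (1 - ppv) / ppv  # chosen so that tp / (tp + fp) == ppv
    tn = negatives - fp
    return Confusion(tp, fp, fn, tn)


if __name__ == "__main__":
    # Same PPV and FNR in both groups, different prevalences.
    for name, prevalence in [("A", 0.5), ("B", 0.2)]:
        g = build_group(1000, prevalence, ppv=0.8, fnr=0.2)
        print(name, f"PPV={g.ppv:.2f}", f"FNR={g.fnr:.2f}", f"FPR={g.fpr:.3f}")
```

With PPV = 0.8 and FNR = 0.2 in both groups, the group with prevalence 0.5 ends up with an FPR of 0.2, while the group with prevalence 0.2 ends up with an FPR of 0.05: equalising two of the three rates pushes the disparity into the third.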

Standards can play a key role in fostering much-needed coherence, operationalisation, and consensus around questions of trustworthy AI. Correspondingly, there is a wide and growing range of AI-related standardisation efforts that seek to address questions in this area. The potential of standards for achieving trustworthy AI is also reflected in current policy and regulatory developments, including the UK Government’s proposed framework for regulating AI and the EU’s proposed AI Act (which envisages the development of harmonised standards to support the requirements set out in the Act).

What is trustworthy AI?

The Hub is taking a deliberately broad perspective on the meaning of trustworthy AI, which will allow our work to be driven by the needs of the community. We understand trustworthy AI as the challenge of ensuring that AI systems are ethical, work as expected, and are used responsibly. None of these elements can be taken for granted: the design and development of AI systems can raise a host of ethical questions; guaranteeing that systems work as expected can involve significant difficulties (especially as systems increase in their complexity); and there are potentially serious risks if systems are used inappropriately or without adequate human oversight.

For the purposes of the Hub’s work, relevant considerations in addressing trustworthy AI, thus understood, include both technical (e.g., engineering choices) and non-technical (e.g., effective communication with users and affected communities) aspects of the ways in which AI systems are designed, developed, and used.

Given the diverse range of challenges for achieving trustworthy AI, it is helpful to approach the theme by distinguishing between the different characteristics that need to be present in order for AI systems and their use to be deemed trustworthy. The list of relevant characteristics is long and growing longer, but some of its more prominent entries include transparency, fairness, reliability, safety, security, and privacy. Ultimately, the specific requirements for trustworthy AI will depend on the diverse needs and perspectives of users and affected individuals in the contexts in which AI systems are being deployed.

What’s next?

Our engagement showed a strong demand for the Hub to pursue activities dedicated to specific trustworthiness topics rather than to trustworthy AI in general terms, reflecting a shared perception that progress in this thematic area increasingly hinges on a concrete, detail-oriented approach. Informed by this feedback, the Hub will pursue activities dedicated to three particular topics over the next few months. Among other things, the Hub will bring the community together to examine what standards are currently being developed in relation to a given topic, to what extent these standards are aligned or divergent, and what gaps they may leave unaddressed when compared to best practices established in the broader responsible innovation landscape.

The three topics that the Hub will be looking at in detail over the next six months are as follows:

- Transparency and explainability: These are not only important values in their own right but also preconditions for achieving other trustworthiness characteristics, such as fairness and accountability. Transparency and explainability are complex topics with many unsolved challenges. The Hub will explore several of these challenges, including the frequent lack of agreed terminology, difficulties in determining the adequacy of different technical approaches to achieving explainability, and the need to translate information about the same AI system for vastly different audiences.

- Safety, security, and resilience: Product safety, cybersecurity, and resilience in non-AI contexts have long been established as important areas for standardisation. The Hub will consider the role of established standards in these areas when it comes to the AI context and examine central issues and challenges for achieving safe, secure, and resilient AI. This includes questions about general risk management frameworks and organisational processes for AI, as well as specific risks such as model drift or model stealing.

- Uncertainty quantification: AI systems that rely on machine learning can involve significant forms of uncertainty, stemming from sources such as random effects in the data and uncertainty about the parameters of the model. These data and parameter uncertainties can propagate through a system, leading to uncertainty about its outputs. Uncertainty quantification aims to measure output uncertainty by accounting for the sources of uncertainty in the model. One important gap, not currently addressed by most methods, is uncertainty in the model’s input variables.
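To make the idea of propagating uncertainty concrete, here is a minimal Monte Carlo sketch in Python, assuming a toy one-variable linear model fitted to synthetic data. Parameter uncertainty is approximated by bootstrap refits (one of several possible techniques), data noise by the residual spread, and input uncertainty by sampling the input from a normal distribution. The "true" process y = 1 + 2x, the noise levels and all function names are hypothetical choices for illustration only.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def bootstrap_params(xs, ys, n_boot, rng):
    """Refit on resampled data to approximate parameter uncertainty."""
    n = len(xs)
    params = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        params.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return params


def predictive_samples(params, sigma, x_mean, x_sd, rng):
    """Propagate parameter, data-noise and (optionally) input uncertainty."""
    out = []
    for a, b in params:
        # Input uncertainty: sample x instead of treating it as exact.
        x = rng.gauss(x_mean, x_sd) if x_sd > 0 else x_mean
        # Data noise: add residual-scale noise to each prediction.
        out.append(a + b * x + rng.gauss(0.0, sigma))
    return out


def demo(seed=0):
    rng = random.Random(seed)
    # Synthetic data from a hypothetical process y = 1 + 2x + noise.
    xs = [rng.uniform(0.0, 10.0) for _ in range(50)]
    ys = [1.0 + 2.0 * x + rng.gauss(0.0, 0.5) for x in xs]
    a, b = fit_line(xs, ys)
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    sigma = statistics.stdev(residuals)  # estimate of data noise
    params = bootstrap_params(xs, ys, 2000, rng)
    exact_input = predictive_samples(params, sigma, 5.0, 0.0, rng)
    noisy_input = predictive_samples(params, sigma, 5.0, 1.0, rng)
    return statistics.stdev(exact_input), statistics.stdev(noisy_input)


if __name__ == "__main__":
    sd_exact, sd_noisy = demo()
    print(f"output sd, input known exactly: {sd_exact:.2f}")
    print(f"output sd, input sd = 1.0:      {sd_noisy:.2f}")
```

In this toy setting, treating the input as exact yields an output spread close to the data noise, while allowing for input uncertainty widens it considerably, which illustrates why methods that ignore input uncertainty can understate how uncertain a system's outputs really are.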

Activities for each topic will take a variety of forms and include blog posts, webinars, workshops, and online forum discussions. To receive updates about these activities, please sign up for our email newsletter. If you are interested in contributing to the direction of these activities at an early stage, our Trustworthy AI forum provides an opportunity for doing so.

Thematic kick-off at the launch event

The AI Standards Hub launch event kicked off the Hub’s work on trustworthy AI. The event, which explored the points covered in this blog post in greater detail and featured a panel discussion on the role of standards in the context of trustworthy AI with speakers from techUK, Digital Catapult, the MHRA, and CDEI, is available to watch below.
