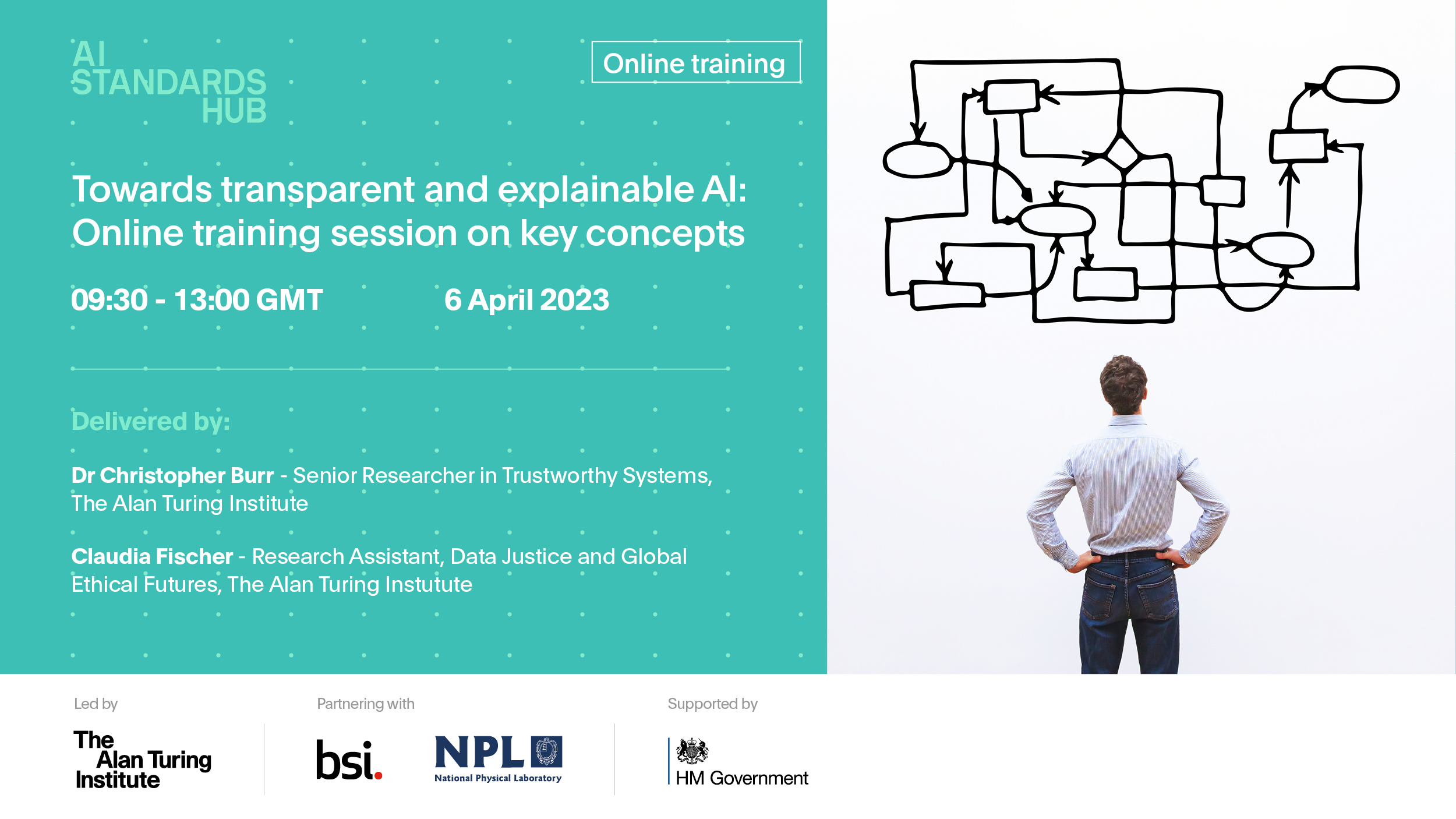

Towards transparent and explainable AI: Online training session on key concepts

Date: 6 Apr 2023

Time: 9:30 am – 1:00 pm

Location:

Online

Description

This training event is now fully subscribed. If you would like to stay informed about our future events, please sign up to our bi-weekly newsletter.

Transparency and explainability are key considerations for trustworthy AI. Continuing the Hub’s activity strand on these topics (including our recent webinar and workshop), this interactive online training session will introduce key concepts and terminology related to transparency and explainability in the context of AI.

The session will explore a range of topics, such as how to ensure transparent documentation and accountable governance across a project’s lifecycle, and the benefits and limits of different methods for achieving model interpretability. Facilitated by experts from The Alan Turing Institute, it will combine presentations with group discussions and structured interactive exercises designed to contextualise and ground the concepts and terminology covered.

The content is designed to provide foundational, practice-oriented knowledge that enables participants from any stakeholder group to contribute actively to advancing transparent and explainable AI, including by translating this knowledge into the development of adequate standards in this area.

The session does not assume any prior knowledge of transparent and explainable AI, but participants should have a general understanding of machine learning and AI concepts (such as models, algorithms, and classification tasks).

Key Information

Event Type: Online

Free to attend: Yes

Start time: 9:30 am

End time: 1:00 pm

Date: 6 Apr 2023